Imagine an AI That Discovers Things on Its Own

Relax, sit back, and watch your AI do the heavy lifting.

Imagine a world where new discoveries happen on their own. You simply tell your AI everything you know, and it puts the pieces together. It collects data, figures out what you’re looking for, emails people who might have more information, asks them questions, incorporates what they tell it, and, after days, weeks, or even months of scanning and analyzing your data, comes up with a new solution. It sounds like magic, and this kind of magic is just around the corner.

There’s only one problem: have you ever wondered if the AI’s information is actually true, or if it’s just a well-crafted lie? Would you trust an AI solution that emerges from a black box with no clear references or evidence to verify its sources?

Let me share how my friend Raf and I tackled this issue head-on by creating a system that makes sure AI doesn’t just sound convincing, but is actually correct. We call it ImpliedAI.

If something in this newsletter interests you, please share it with a friend or colleague who might also find it useful. As much as I enjoy writing for 200 people, I’d rather be writing for 200k.

In this newsletter:

The deceptive nature of AI

The quest for truth

The opportunity beyond ChatGPT

Did I just say that AIs lie to you? Yes, and often without you even realizing it.

Developers like us know that AIs lie because code makes it easy to check: it either works or it doesn’t. When it doesn’t, we can see what is making it fail and adjust our prompts until it works. Detecting a lie in spoken or written text is much harder.

Here is a simple example from everyday life. Imagine you're asking an LLM, which we'll call "ChatBot," about the weather:

User: "ChatBot, what's the weather like outside today?"

ChatBot: "It's a sunny day with clear skies!"

This might sound perfectly reasonable, but today it’s actually overcast with a high chance of rain. You won’t know whether it’s true until you look out the window or go outside. Until then, you are at the mercy of the LLM’s response.

Why does it sound so convincing?

Confident language – The AI’s confident tone makes us trust its information.

Plausibility – We want it to be sunny, so we’re inclined to believe the good news.

No immediate verification – Unless we actually check outside, the lie (or error) goes undetected.

Why is the ChatBot making this error? A few possible reasons:

• It might have looked up the wrong weather report, the wrong location, or the wrong date. Because it sounds confident, we don’t question it.

• It could be using outdated or biased training data. If sunny days were more common in its dataset, for example, it might be biased toward reporting sunshine over rain. We have no insight into its training data.

Of course, people lie too, and weather forecasters get it wrong all the time. If you go outside without an umbrella and it rains, the consequences are usually minor. But if you trust medical advice that isn’t true, the consequences could be fatal. No matter how often the AI tells you it is not a doctor, once you are used to following its every word, you are very likely to follow its unverified advice on anything else too.

How do we fix this? How do we make an AI that never lies?

Easy. Here’s how we do it at ImpliedAI.com:

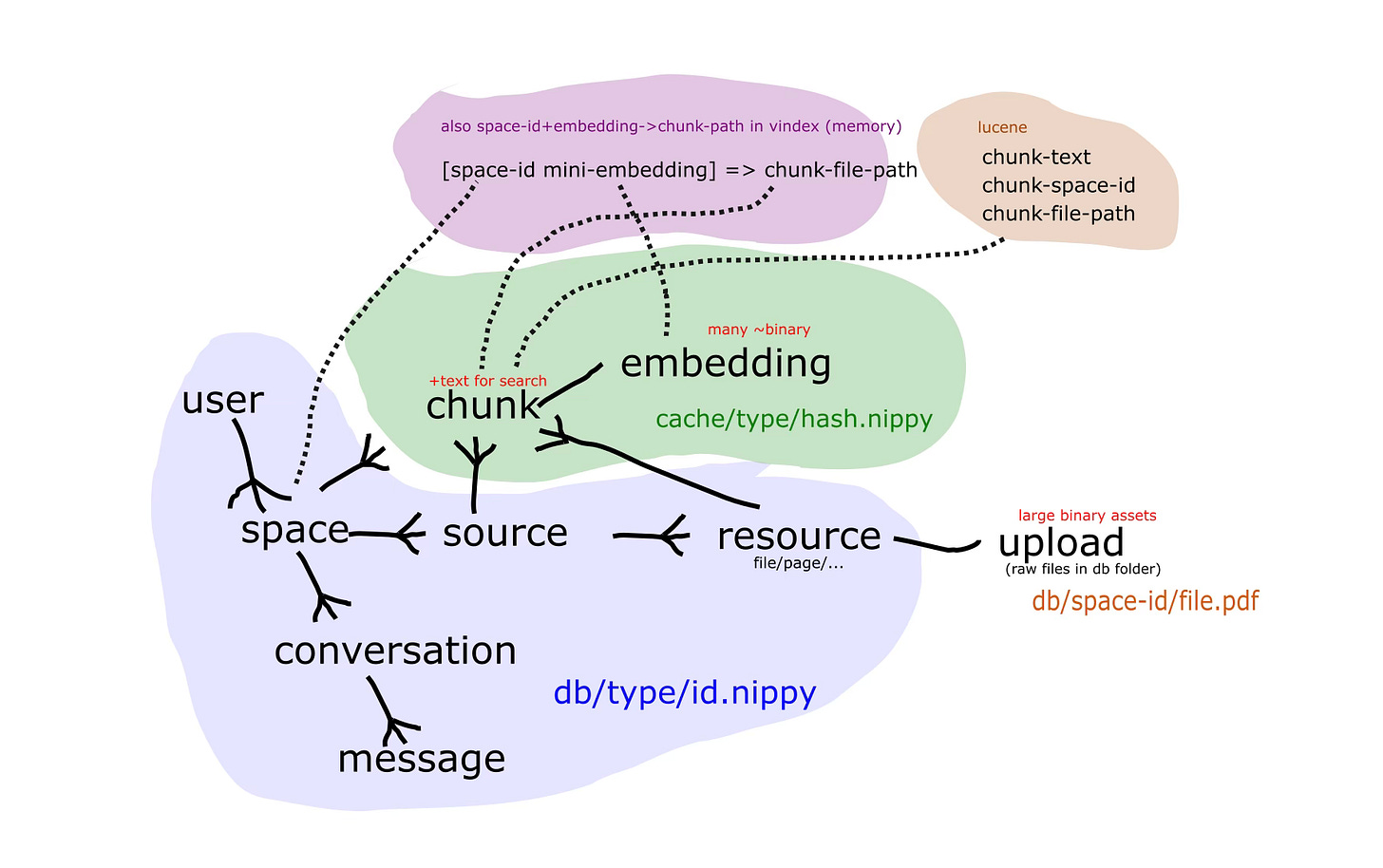

If that diagram looks overwhelming, I don’t blame you. I’m joking when I say it’s easy—there are days I wish the internet didn’t exist. But there are also days when my curiosity takes over, and those days make all the difference.

We make the AI reference thousands of pieces of information and compile them into a cohesive answer that tells you exactly what you need to know, with a citation to the original source. Achieving this is fun, but it’s also as difficult as it sounds.

When Raf and I developed this system back in August, none of the AI companies offered anything similar in their chat apps. Now, only Google’s NotebookLM does something along these lines; Claude and ChatGPT still can’t handle documents beyond a relatively small file size. Grok seems to be making good progress, though.

So, how do we actually make AI tell the truth?

Pre-prompt the model – We literally tell the AI: “You are a system that will never lie and will never make up information without having a clear source…”

Provide more data – The best way to solve the missing-information problem is to give the AI all the data it needs.

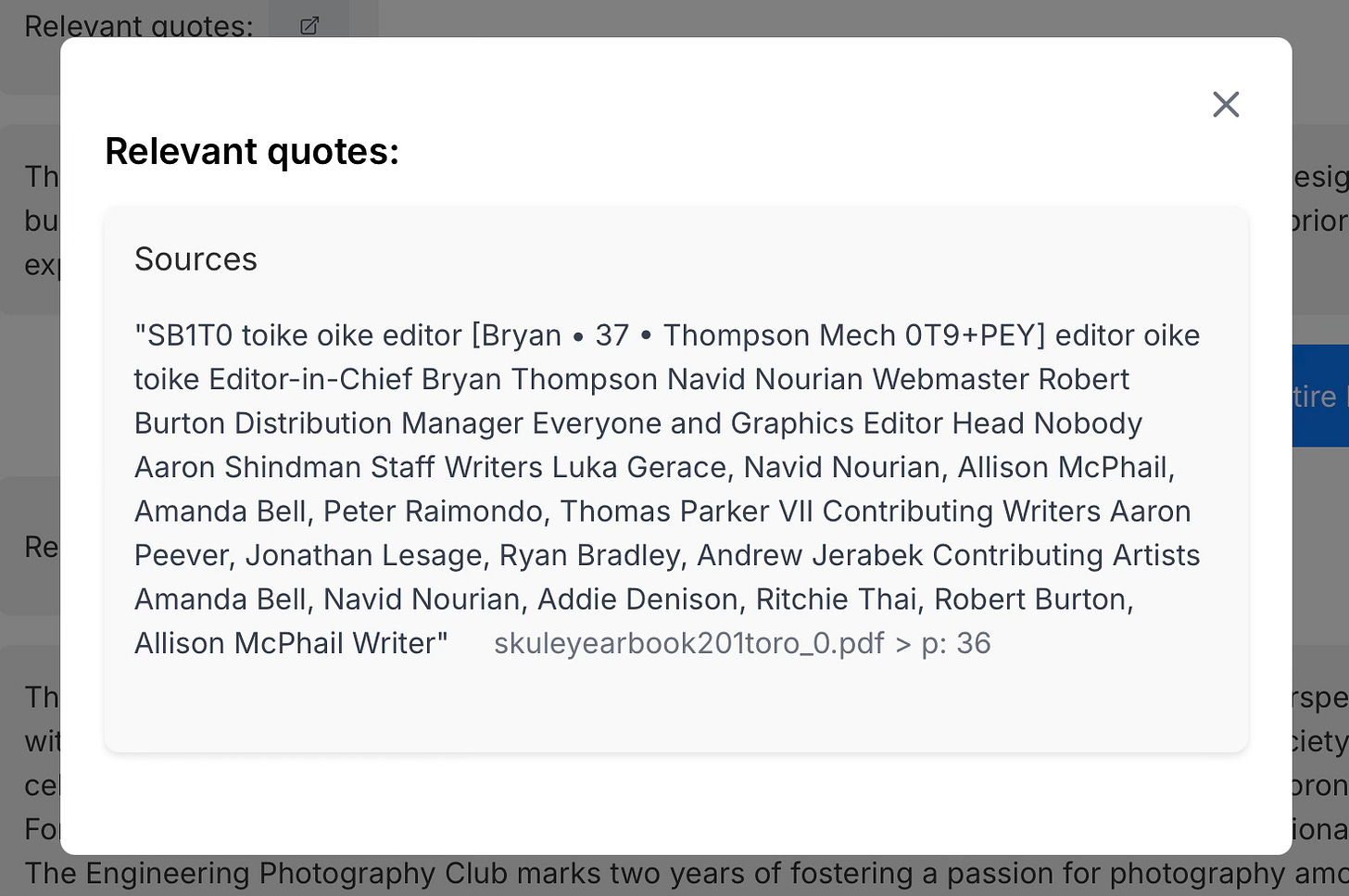

Include citations – We break documents into small chunks, turn each chunk into an embedding (a vector of numbers that captures its meaning), and store the embeddings as a matrix alongside the original plain text. When you query the AI, it retrieves the chunks whose embeddings are most similar to your question and cites exactly where it got the information. [OK, it’s quite a bit more complicated than that. I’ll be releasing a technical post on how this is done for paying subscribers.]
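To make the chunk, store, and retrieve idea a little more concrete, here is a minimal sketch under stated assumptions; it is not ImpliedAI’s actual pipeline. It assumes the PDF pages have already been extracted as plain text, splits each page into paragraph chunks that remember their file, page, and paragraph number, and keeps each chunk’s vector next to its text. The `embed` function is a toy hashed bag-of-words stand-in for a real embedding model, and every name here (`Chunk`, `chunk_pages`, `retrieve`, `DIM`) as well as the sample yearbook text is hypothetical.

```python
import math
import re
import zlib
from collections import Counter
from dataclasses import dataclass

DIM = 1024  # hypothetical vector size for the toy embedder


@dataclass
class Chunk:
    source: str          # file the chunk came from
    page: int            # 1-based page number
    paragraph: int       # 1-based paragraph number within the page
    text: str            # plain text, kept so it can be shown as the citation
    vector: list[float]  # embedding stored next to the text


def embed(text: str) -> list[float]:
    """Toy stand-in for a real embedding model: hashed word counts, scaled to unit length."""
    vec = [0.0] * DIM
    for word, count in Counter(re.findall(r"[a-z0-9]+", text.lower())).items():
        vec[zlib.crc32(word.encode()) % DIM] += count
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def chunk_pages(source: str, pages: list[str]) -> list[Chunk]:
    """Split every page on blank lines so each chunk keeps its page and paragraph location."""
    chunks = []
    for page_no, page in enumerate(pages, start=1):
        paragraphs = [p.strip() for p in page.split("\n\n") if p.strip()]
        for para_no, para in enumerate(paragraphs, start=1):
            chunks.append(Chunk(source, page_no, para_no, para, embed(para)))
    return chunks


def retrieve(chunks: list[Chunk], query: str, k: int = 3) -> list[tuple[float, Chunk]]:
    """Rank chunks by cosine similarity; vectors are unit length, so a dot product suffices."""
    q = embed(query)
    scored = [(sum(a * b for a, b in zip(q, c.vector)), c) for c in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


# Made-up two-page "yearbook"; the editor's name is a placeholder, not real data.
pages = [
    "Welcome to the yearbook.\n\nThe engineering society met every Friday.",
    "Toike Oike staff list.\n\nThe editor of Toike Oike this year was Jane Doe.",
]
chunks = chunk_pages("yearbook.pdf", pages)
for score, chunk in retrieve(chunks, "Who was the editor of Toike Oike?", k=1):
    print(f"{chunk.source}, page {chunk.page}, paragraph {chunk.paragraph} "
          f"(score {score:.2f}): {chunk.text}")
```

Keeping the page and paragraph on every chunk is what lets an answer point back to an exact location, as in the yearbook example later in this post. A production system would swap the toy embedder for a real model and the Python lists for a vector index.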

It might sound straightforward, but injecting, parsing, storing, and referencing thousands of data points quickly gets complicated. Still, the concept is simple: citations reduce the AI’s ability to lie undetected.
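The pre-prompt and the citation step can be tied together in a similarly hedged sketch. Only the opening sentence of the pre-prompt comes from this post; its continuation, the `[n]` citation format, and the helper names `build_prompt` and `check_citations` are assumptions for illustration. The check only confirms that every citation in an answer points at a source that was actually supplied; it does not prove that the cited passage supports the claim.

```python
import re

# Opening sentence of the pre-prompt as quoted in the post.
PREPROMPT_OPENING = (
    "You are a system that will never lie and will never make up information "
    "without having a clear source"
)
# Hypothetical continuation; the rest of the real pre-prompt is not published.
PREPROMPT_CONTINUATION = (
    ". Answer only from the numbered sources below and cite every claim as [n]. "
    "If the sources do not contain the answer, say that you do not know."
)
SYSTEM_PROMPT = PREPROMPT_OPENING + PREPROMPT_CONTINUATION


def build_prompt(question: str, sources: list[tuple[str, str]]) -> str:
    """Number each retrieved (location, text) pair so the model can cite it as [n]."""
    lines = [SYSTEM_PROMPT, "", "Sources:"]
    for i, (location, text) in enumerate(sources, start=1):
        lines.append(f"[{i}] ({location}) {text}")
    lines += ["", f"Question: {question}"]
    return "\n".join(lines)


def check_citations(answer: str, num_sources: int) -> tuple[bool, list[int]]:
    """Flag answers that cite nothing, or cite a source number that was never supplied."""
    cited = sorted({int(n) for n in re.findall(r"\[(\d+)\]", answer)})
    ok = bool(cited) and all(1 <= n <= num_sources for n in cited)
    return ok, cited


# Placeholder source standing in for a chunk returned by retrieval.
sources = [("yearbook.pdf, page 2, paragraph 2",
            "The editor of Toike Oike this year was Jane Doe.")]
print(build_prompt("Who was the editor of Toike Oike?", sources))
print(check_citations("The editor was Jane Doe [1].", len(sources)))  # (True, [1])
print(check_citations("The editor was John Roe [3].", len(sources)))  # (False, [3])
print(check_citations("The editor was John Roe.", len(sources)))      # (False, [])
```

An answer that fails this check can be rejected or sent back to the model before a user ever sees it, which is one simple way citations make an undetected lie harder.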

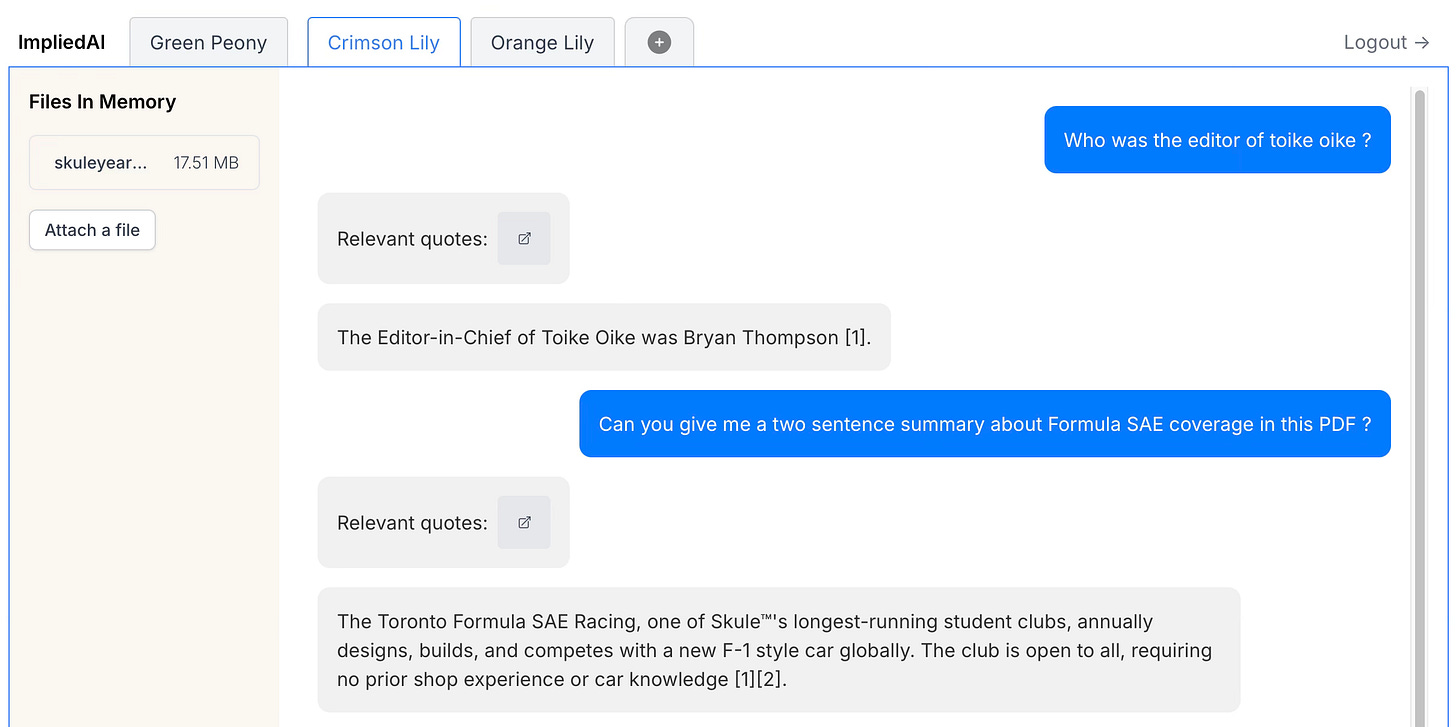

If you’d like to see this in action on your own data, go to ImpliedAI.com, open the playground, add a couple of PDFs, and ask some questions. It works with TXT and PDF files, though it takes a few minutes to process them. I can’t guarantee it works well yet, as it’s still a playground. Ultimately, my plan is to offer an on-demand solution customized to each organization’s needs, rather than a one-size-fits-all SaaS that tries to do everything and does it all poorly.

Let me show you a practical example.

I uploaded a PDF of an old yearbook from my college days into ImpliedAI. Then I asked, “Who was the editor of Toike Oike?” (a humorous engineering newspaper). The system responded correctly, pointed me to the right document, and showed me exactly which page and paragraph confirmed the answer.

This example might be simple, but it illustrates how you can make AI provide verifiable answers when you feed it the right information. The only constraints are how much data you’re willing to give it and how much computing power you have.

Where does this lead?

With enough data, we could get answers about absolutely everything. Imagine uploading all the data about your personal life, your business, your competitors, and your work, and then letting an AI continuously search for insights. You wouldn’t even need to know the questions—the AI would figure them out from the data itself.

We already do this with ImpliedAI. If we identify something meaningful, we can search through all the relevant data for more connections. The main question is whether you’d trust a company like OpenAI with your private data.

Plenty of companies already host their data in the cloud, trusting their agreements to keep it secure. At the same time, some companies have been moving off the cloud because of cost and security concerns. After all, your knowledge is your weapon. If you store everything with someone else, do you really own anything?

The opportunity beyond ChatGPT

We stand on the brink of an era where AI isn't just a tool but a collaborator in our quest for knowledge and innovation. The promise of AI discovering things on its own is tantalizing, but it comes with the responsibility of ensuring the truthfulness of its findings. With ImpliedAI, Raf and I have taken steps to ensure that AI not only assists but also informs with integrity.

As we move forward, the integration of AI into our lives will be defined by how well we can trust these systems. The implications are profound:

Will we embrace a future where AI not only enhances our capabilities but does so with an unyielding commitment to truth?

Can we create a symbiotic relationship where AI leverages our collective knowledge to push the boundaries of what's possible?

The answers to these questions will shape not just our technologies but our society. The journey from here involves not just technological advancements but also a philosophical commitment to transparency, accountability, and ethical innovation.

So, let's not just imagine an AI that discovers on its own; let's envision one that does so with the same honesty and dedication we expect from ourselves. The future of AI is not just about what it can do, but about how true it can be to the data, to us, and to the pursuit of knowledge.

Join us in this quest for truth, and let's build a future where AI doesn't just reflect our world but helps us understand it more deeply, accurately, and truthfully than ever before.

If you want to learn more about the technical side of ImpliedAI, please subscribe to the paid version of The Novice. If you’d like to bring these systems into your organization, reply to this email and let’s chat.
