Vibe Code Transformation: More Planning, Less Building = Better Results.

There’s a lot of chatter on the internet from experienced developers talking about how AI coding is just not working out for them. They don’t trust AI to write good code, as they see it creating more slop than value. They don’t think “vibe coding” is any good. I don’t blame them.

You’ve got to have quite a lot of pain tolerance to find a convergence point where your skills and the AI's skills work in sync. It’s a full-time job, and it requires as much attention as it took to learn how to code in the first place.

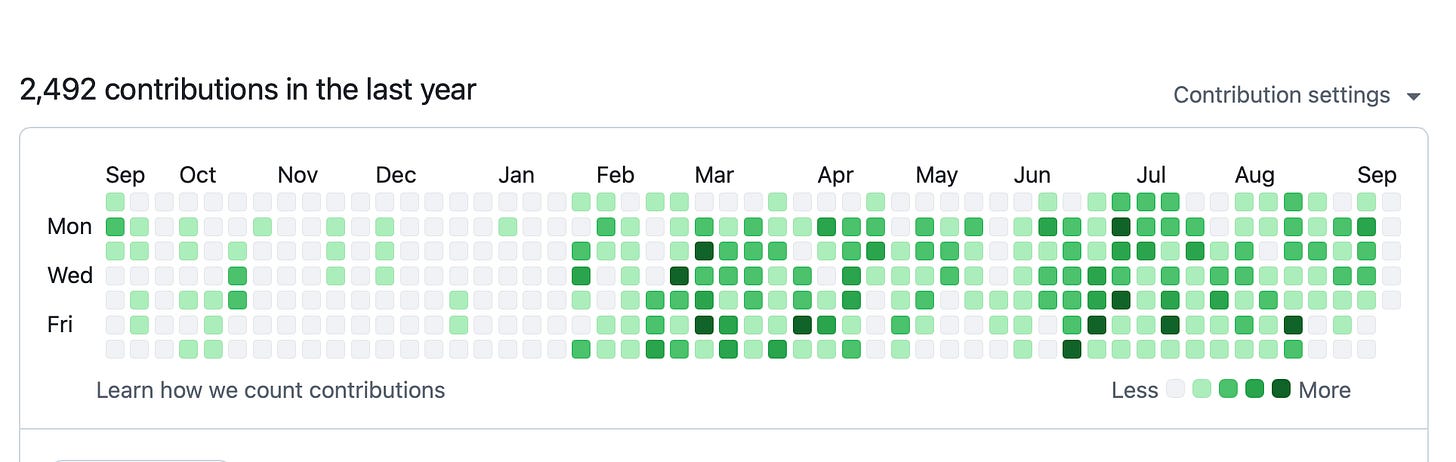

It’s taken me six months and a million lines of code to figure this out.

When I started vibe coding in February, I would dive into code with AI headfirst, expecting Cursor to churn out code while I sipped coffee. It worked, sometimes. Often, though, it also caused a lot of rework and frustration.

Today, even though I spend nearly 100% of my time coding with AI, and AI writes 100% of my code, I also spend more time planning than actually writing code, a shift that’s transformed my workflow and results.

Planning as the Foundation

About three months ago, I started treating AI as a planning partner, not just a code generator. Now, I dedicate 60-70% of my time to defining features—breaking them into clear requirements, sketching UI flows, and mapping out logic. This upfront work ensures I know exactly what I’m building and why. This also means the AI knows exactly what we are building.

For those of you familiar with the “waterfall model of software development,” this will look ironically familiar. In a waterfall, each phase must be completed before the next can begin, following a clear progression from requirements gathering through design, implementation, testing, and deployment. It works shockingly well for AI too.

The key difference between today and waterfall development 30 years ago is that you can now just do things. You don’t have to have teammates or meetings. You can get the knowledge you need, be that databases, design, server architecture, or anything else, all from your AIs. What previously took months can now be done by agents in hours.

Product development with AI is the best combination of speed (agile) and focus (waterfall). Many people will have their own unique flavor of how to do this. Here’s the method that has worked for me:

1. Figure out version-zero.

Scale it down to the basics, then scale it down further.

Whether you use the Scoutzie MVP Agent or just chat with your favorite LLM, get to the point where you can clearly explain every aspect of your proposed design such that the AI has no more questions about it. This doesn’t mean you have the exact answers to everything, but it means you are crystal clear about what goes into v0 and how it works and looks.

Use AI to help you. It’s okay not to know everything in this stage. Ask AI to suggest best practices. You’ve got to learn to let go and be okay with a solution that does what you need, but not necessarily how you’ve envisioned it. The outcome should be the focus, not the process.

An MVP is something you build to test the concept and to make sure it works. It doesn’t have to scale to a million users, and it doesn’t have to have redundancy or fancy architecture. Pieter Levels famously runs his apps on a tiny server as a single PHP page. Whether you love it or hate it, be more like Pieter.

2. Break your design into features.

Explain each feature in its own document. You don’t want to build a monolith, but a set of APIs that work together for a common good. It would be a lot easier for AI to create these features if they are not interconnected. You can connect them in the next step.

The better you can identify and explain each feature as its own document, the more satisfied you will be with your outcome.

Best practice here: Use detailed, contextual prompts. For example, instead of "write a login function," say "Implement a secure user authentication API using JWT in Go, handling email/password login, with error handling for invalid credentials and rate limiting to prevent brute-force attacks. Reference the user model from the attached schema."

I've asked AIs hundreds of questions like this, from "What's the best way to structure a React component for a dashboard?" to "How do I optimize database queries in PostgreSQL for high-traffic apps?" Drawing from those interactions, always include constraints, expected outputs, and examples to minimize rework.
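One way to make "constraints, expected outputs, and examples" a habit is to keep each feature as structured data and render the prompt from it. The sketch below is a minimal illustration in Python, not a prescribed tool: `FeatureSpec`, `build_prompt`, and the sample "expected outputs" entry are names and values invented for this example.

```python
from dataclasses import dataclass, field


@dataclass
class FeatureSpec:
    """Hypothetical container for one feature document."""
    name: str
    goal: str
    constraints: list[str] = field(default_factory=list)
    expected_outputs: list[str] = field(default_factory=list)
    examples: list[str] = field(default_factory=list)


def _bullets(title: str, items: list[str]) -> list[str]:
    """Render a titled bullet list, or nothing when the list is empty."""
    if not items:
        return []
    return [f"{title}:"] + [f"- {item}" for item in items]


def build_prompt(spec: FeatureSpec) -> str:
    """Turn a feature spec into one explicit prompt string."""
    lines = [f"Feature: {spec.name}", f"Goal: {spec.goal}"]
    lines += _bullets("Constraints", spec.constraints)
    lines += _bullets("Expected outputs", spec.expected_outputs)
    lines += _bullets("Examples", spec.examples)
    return "\n".join(lines)


# The login example from the text, restated as a spec.
login = FeatureSpec(
    name="User authentication API",
    goal="Email/password login that issues a JWT, implemented in Go.",
    constraints=[
        "Return an error for invalid credentials.",
        "Rate-limit attempts to prevent brute-force attacks.",
        "Reference the user model from the attached schema.",
    ],
    expected_outputs=["A handler plus unit tests."],  # illustrative assumption
)
prompt = build_prompt(login)
```

Empty sections are simply left out of the prompt, so a missing "Examples" list is easy to spot when you read the spec back.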

3. Create a guide for the LLMs.

This is where you convert your previous MVP document that you created to explain the project to people into a document that explains the project to developers. Simply treat your large language model as one of your teammates and explain to it the task, what its role is in producing the output, and how you want to do the work.

You can start very basic, with just one document that outlines all the features and tells the AI to go build. Over time, I have created a more complex process in which I have a number of agents with different roles.

First, I have a project manager that reviews my technical document. Then I send it to a senior engineer to review and find any ambiguities. We correct the document and turn it into a set of tasks for junior engineers to perform asynchronously. We also create test parameters for every feature, and once the engineers are done, we make sure the tests pass, and so on.

You get the point. Take the process that you would follow in any organization to create a product and do just that, except all of your coworkers are computers.
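To make the idea of roles concrete, here is a rough Python sketch of a review pipeline where each role is a function that takes a spec and hands it to the next one. Everything in it is a stand-in: the `Spec` class, the stage names, and the simple string checks are assumptions for illustration. In a real setup each stage would call an LLM agent instead.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Spec:
    """Hypothetical technical document moving through the review stages."""
    text: str
    notes: list[str] = field(default_factory=list)
    tasks: list[str] = field(default_factory=list)


Stage = Callable[[Spec], Spec]
VAGUE_WORDS = ("fast", "simple", "nice")  # illustrative list, not exhaustive


def project_manager(spec: Spec) -> Spec:
    """Stand-in for a PM agent: checks that acceptance criteria exist."""
    if "acceptance criteria" not in spec.text.lower():
        spec.notes.append("PM: no acceptance criteria listed")
    return spec


def senior_engineer(spec: Spec) -> Spec:
    """Stand-in for a senior engineer agent: flags ambiguous wording."""
    words = spec.text.lower().split()
    for word in VAGUE_WORDS:
        if word in words:
            spec.notes.append(f"Senior engineer: '{word}' is ambiguous")
    return spec


def task_splitter(spec: Spec) -> Spec:
    """Turn each '- ' bullet in the document into one task."""
    spec.tasks = [
        line.lstrip("- ").strip()
        for line in spec.text.splitlines()
        if line.startswith("- ")
    ]
    return spec


def run_pipeline(spec: Spec, stages: list[Stage]) -> Spec:
    """Pass the spec through each stage in order."""
    for stage in stages:
        spec = stage(spec)
    return spec


doc = Spec(
    text="Login feature\n"
    "- Build a fast login endpoint\n"
    "- Write tests for invalid credentials"
)
result = run_pipeline(doc, [project_manager, senior_engineer, task_splitter])
```

After the run, `result.notes` holds the reviewers' objections and `result.tasks` holds the work items, which mirrors the "correct the document, then hand out tasks" loop described above.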

Pro Tip: Tell AI that you want all features to work in code, before they work in the interface. This means you should be able to execute your entire workflow end-to-end without ever opening the browser.

This approach is a huge lifesaver because an interface can add a lot of complexity to an application. The smoother you want it to be, the more code it takes. On the other hand, if you make the application work in the terminal, and you know it works and does what you want, then you can lock the logic part of the application and focus on design and interface separately.
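As a small illustration of "code first, interface later," the sketch below keeps the logic in plain functions and adds only a thin terminal wrapper. The waitlist feature, `normalize_email`, `add_signup`, and `main` are made up for this example, and the email check is deliberately simplistic.

```python
import argparse


def normalize_email(raw: str) -> str:
    """Lowercase and trim an email, rejecting obviously malformed input."""
    email = raw.strip().lower()
    if email.count("@") != 1 or email.startswith("@") or email.endswith("@"):
        raise ValueError(f"invalid email: {raw!r}")
    return email


def add_signup(waitlist: list[str], raw_email: str) -> list[str]:
    """Core logic: return a waitlist that includes the email exactly once."""
    email = normalize_email(raw_email)
    if email in waitlist:
        return waitlist
    return waitlist + [email]


def main(argv: list[str]) -> int:
    """Thin terminal wrapper so the workflow runs without a browser."""
    parser = argparse.ArgumentParser(
        description="Exercise the waitlist logic from the terminal."
    )
    parser.add_argument("emails", nargs="+", help="emails to sign up")
    args = parser.parse_args(argv)

    waitlist: list[str] = []
    for raw in args.emails:
        try:
            waitlist = add_signup(waitlist, raw)
        except ValueError as err:
            print(f"error: {err}")
            return 1
    print("\n".join(waitlist))
    return 0
```

Wiring it up as a script is one line, `sys.exit(main(sys.argv[1:]))`, and a web interface added later can call `add_signup` directly without touching the logic.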

AIs love to delete code, so the more isolated you can make your code, the less trouble you run into later, and the easier it is to spot issues.

4. Generate code for each isolated feature.

Once your features are broken down and documented, feed them one by one into your AI tool of choice—I've had great success with tools like Grok for brainstorming and Claude Code (in God mode) for actual code generation. Provide the AI with the feature documents, your overall project guide, and specific instructions on tech stack (e.g., Next.js for frontend, Go for backend if that's your niche).

Ask the AI to output code in small, testable chunks, and then make it test each chunk before committing to Git, each feature as its own commit.

Note: you don’t commit to Git yourself; in fact, you don’t touch your keyboard here at all. The AI does all the work and all the commits.
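The "test each chunk, then commit" rule can also be enforced by a small gate script that an agent is told to run. This is a hedged sketch, assuming Git is installed and that the test command exits with 0 on success; `commit_if_green` and the commit message format are invented for illustration.

```python
import subprocess
from typing import Callable, Sequence

# A runner takes a command and returns its exit code.
Runner = Callable[[Sequence[str]], int]


def run(cmd: Sequence[str]) -> int:
    """Default runner: execute the command and return its exit code."""
    return subprocess.run(list(cmd)).returncode


def commit_if_green(
    feature: str, test_cmd: Sequence[str], runner: Runner = run
) -> bool:
    """Commit the feature only when its tests pass.

    Returns True if the commit was made, False if any step failed.
    """
    if runner(test_cmd) != 0:
        return False  # tests failed, so nothing is staged or committed
    if runner(["git", "add", "-A"]) != 0:
        return False
    # One commit per feature; the message format is an assumption.
    return runner(["git", "commit", "-m", f"feat: {feature}"]) == 0
```

For example, `commit_if_green("login", ["go", "test", "./..."])` would stage and commit only after the Go tests succeed. The `runner` parameter exists so the gate itself can be tested without touching a real repository.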

5. Integrate features and resolve interconnections.

With individual features coded and tested in isolation, now connect them. This is where you might encounter conflicts: AI-generated code from different prompts might use inconsistent naming or patterns. AI can help here too. Prompt it to review the integrated codebase for inconsistencies, suggest refactors, or even generate integration tests. If things break, roll back and refine the original feature docs. Make good use of Git!
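A mechanical check can catch some naming drift before you ask an AI to review anything. The sketch below groups function names by naming style using Python's standard `ast` module. It assumes the code under review is Python, and `naming_style` and `function_styles` are hypothetical helpers; for a Go or Next.js codebase you would reach for that ecosystem's linter instead.

```python
import ast


def naming_style(name: str) -> str:
    """Classify an identifier as snake_case, camelCase, or other."""
    core = name.strip("_")
    if core and core == core.lower():
        return "snake_case"
    if core and core[0].islower() and "_" not in core:
        return "camelCase"
    return "other"


def function_styles(source: str) -> dict[str, list[str]]:
    """Group every function name in the source by its naming style."""
    styles: dict[str, list[str]] = {}
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            styles.setdefault(naming_style(node.name), []).append(node.name)
    return styles


# Two features generated from different prompts, merged into one file.
sample = (
    "def get_user(): pass\n"
    "def fetchOrders(): pass\n"
    "def save_order(): pass\n"
)
styles = function_styles(sample)
```

If `styles` ends up with more than one key, the integrated code mixes conventions, and the minority group is a reasonable starting list for a refactor prompt.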

Best practice: Focus on becoming a hyper-expert in a narrow stack.

I’ve tried a lot of options, from self-hosted, SQLite-based magic-link auth built in Go to paying for cloud APIs with Next.js to do everything for me.

To be honest, I am still learning, and I still don’t have a perfect answer, but I have found some tools that work better than others. Sticking to a particular stack also means you're not going to do some projects that you want to do because they rely on a different architecture, but this means you will be better and faster at doing other things. Choose your battles.

Keep a relentless focus on the end result, a functional app, and allow everything else to find its own steady state.

6. Test thoroughly, deploy, and gather feedback for iteration.

In my experience, this is where you're going to spend the most time if you want things to be really good.

AI is great at generating lots of code fast, but it is not very good at nuance. You will end up with a codebase that repeats itself, or code that does the same thing in three different ways in three different places. You might have variables that overlap and cause ghost bugs, or deployment scripts that copy server settings from one project when deploying another. There are going to be issues; you have to expect them.
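Exact copy-paste duplication is the easiest of these problems to detect automatically. Here is a minimal sketch, again assuming Python source and using only the standard `ast` module, that reports functions whose arguments and bodies are identical. `duplicate_functions` is a name invented for this example, and it will not catch near-duplicates that differ in variable names or structure.

```python
import ast
from collections import defaultdict


def duplicate_functions(source: str) -> list[list[str]]:
    """Return groups of function names with identical arguments and bodies."""
    groups: dict[str, list[str]] = defaultdict(list)
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.FunctionDef):
            # Fingerprint the arguments and body, ignoring the function name.
            # ast.dump omits line numbers by default, so position is ignored.
            fingerprint = ast.dump(node.args) + "".join(
                ast.dump(stmt) for stmt in node.body
            )
            groups[fingerprint].append(node.name)
    return [names for names in groups.values() if len(names) > 1]


sample = (
    "def total(xs):\n"
    "    return sum(xs)\n"
    "\n"
    "def add_all(xs):\n"
    "    return sum(xs)\n"
    "\n"
    "def first(xs):\n"
    "    return xs[0]\n"
)
dupes = duplicate_functions(sample)
```

Each group in `dupes` is a candidate for consolidation, which you can hand back to the AI as a specific, bounded refactoring task.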

In my projects, I always do three things. I make sure we do thorough testing locally, then I make sure I can run a production version of the environment locally, and only then do I finally deploy to production.

Personally, I only add a staging environment after the production environment is running successfully, because until the first version of the application is functioning one hundred percent, there's no point in “staging” changes.

Guess what? Despite my best efforts, I always run into issues at every one of these stages. I don't think it's bad though because once again I'm trying to do a whole team's worth of work between me and the AI so it seems like a reasonable trade-off.

Best practice: Balance exploration (trying new AI features) with exploitation (sticking to what works).

7. Leverage AI for everything you do.

This is a Catch-22 type of suggestion, but for everything I’ve said to do above, you can use AI to help you, or even to do it for you. The only way to escape writing code by hand is to make sure your AIs are doing it to a quality that you can get behind. The only way to get there, without wasting cycles, is to enable your AI to write all of the above, from planning, to tests, to execution, to documentation, to reviews.

I’ve got my process for this, you should have yours.

My Journey and Lessons Learned

My background in engineering has been turbocharged by AI. I've written over a million lines of AI-assisted code in the last 12 months, building tools like tinylandingpage.com for quick website setups and the MVP Agent for product planning. Challenges? Plenty. The opportunity? Enormous.

I've peppered AIs with questions that range from technical ("How to implement real-time updates with WebSockets?") to strategic ("Suggest best practices for scaling a solo dev project?"). I talk to Grok while I drive, learning whatever I am curious about in the moment. The answers shaped my process: Prioritize outcomes over ego, embrace automation for repetition, and refine relentlessly. AI is definitely making me smarter every day.

This AI-augmented waterfall-agile hybrid isn't for everyone, but it's slashed my development time from months to hours. If you're dipping into AI coding, start with planning—it's the unsung hero. Experiment, fail fast, and build your own process. What's worked for you? Share in the comments.

Follow me on X @kirillzubovsky for more AI dev insights, and check out QED for project ideas. Let's build smarter together.

Have an awesome day!

-Kirill Zubovsky.

ps. If you came from Hacker News, I’d appreciate an upvote.